Migrate module makes the assumption that for each item in your source, you're importing one item into Drupal, and that's baked in pretty deep.

So how do you handle source items where there is body text containing multiple inline images, which should be imported to file entities? For each single source item, ultimately imported as a node, you also have a variable number of images: a one-to-many source to destination relationship.

One way is to simply import the image files at the same time as the nodes whose body text they are in. In the prepareRow() method of your migration class, you effectively do a mini-import: analysing the text, fetching the file data, saving the file locally, and then getting a file ID to substitute into the body text. But doing that, you don't benefit from any of Migrate's helper classes for importing files, nor can you roll these back without writing further code: this isn't a Migration, capital M, it's just an import.

The better way is to write a second migration, to run before your node migration. It may seem like extra work to have to write a second migration class, but it pays off, and besides, since they both draw from the same source data, a lot of your code is already written. Copy-paste the class definition and the constructor as far as the field mappings. The code you would have put in prepareRow() to analyse the text goes somewhere else, but we're getting ahead of ourselves.

And it turns out that Migrate does allow a single source item to yield multiple destination items. Tucked away in the module's source classes, and not mentioned in the examples (which, it must be said, cover a great deal and are probably the most extensive examples of any contrib module), there are at least two places that I've found where the one-to-one correspondence can be skirted around.

The one that I used is only available when you use the MigrateSourceList source type, and furthermore when your list source is a set of files. As it happened, the source data I was working with on my project came in JSON files, one file per node to import, and with a body text field which contained references to image files which also needed to be imported.

MigrateSourceList is a source type that separates out the two concepts of listing your source items and processing each one. So unlike, say, the CSV source where the list and the item processing are both provided by the one class, with MigrateSourceList, you can say something like 'my list source is a directory listing, and each item is a JSON file', or 'my list source is a JSON file, and each item is an HTML file'. The MigrateSourceList class delegates the two jobs to further classes, which allows you to mix and match them. Your migration specifies them in the constructor:

    $this->source = new MigrateSourceList(
      new MigrateListFiles($list_dirs, $base_dir),
      new MigrateItemFile($base_dir),
      $fields
    );

There's one more component in the system, and this is the crucial piece that allows us to have more than one destination item per source item: it's the MigrateContentParser class. This allows each of the files that MigrateListFiles lists to return multiple items to MigrateSourceList.

The only implementation of this in Migrate is MigrateSimpleContentParser, which doesn't do much, so you'll want to subclass this for your particular case. It's fairly simple:

- setContent() — perform any processing on the content of the file. In my case, I needed to run it through drupal_json_decode() and grab the body text field, since the whole file was JSON representing a node.

- getChunkCount() — process the file content to return a count of how many items for migration are contained in it.

- getChunkIDs() — similar to getChunkCount(), but return IDs. You will probably end up using a common helper method for this and getChunkCount(), as they do the same sort of work. The IDs you return can be anything you like; in my case they were GUIDs. They are appended to the ID for the file to form the overall source ID.

- getChunk() — given a chunk ID (one of the ones you provided in getChunkIDs()), return the actual data for that item. Again, you may want to use a common helper. In my case, here I merely returned the ID itself, since the images to migrate were on a remote server and accessed by their GUID.

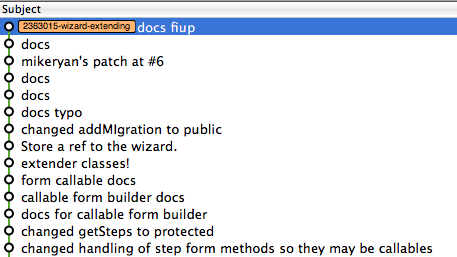
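As a text version of the idea, here is a minimal sketch of such a parser. It is written as a standalone class so it runs outside Drupal; in a real migration it would extend MigrateSimpleContentParser and could use drupal_json_decode() in place of json_decode(). The `body` key, the shape of the image URLs (ending in a 36-character GUID), and the extractGuids() helper are all assumptions made for illustration, not details of the original project.

```php
<?php
// Standalone sketch: in a real migration this class would extend
// MigrateSimpleContentParser from the Migrate module.
class CustomJSONBodyImagesParser {

  // GUIDs of the images found in the current file's body text.
  protected $guids = array();

  // Decode the JSON file and collect the image GUIDs from its body text.
  // Assumption: the body text lives under a top-level 'body' key.
  public function setContent($content, $item_id = NULL) {
    $node = json_decode($content, TRUE);
    $body = isset($node['body']) ? $node['body'] : '';
    $this->guids = $this->extractGuids($body);
  }

  // Hypothetical common helper used by getChunkCount() and getChunkIDs().
  // Assumption: each image src ends with a 36-character GUID.
  protected function extractGuids($body) {
    preg_match_all('@<img[^>]+src="[^"]*?([0-9a-f-]{36})"@i', $body, $matches);
    return array_values(array_unique($matches[1]));
  }

  // How many items for migration this file contains.
  public function getChunkCount() {
    return count($this->guids);
  }

  // The IDs of those items; here, the image GUIDs.
  public function getChunkIDs() {
    return $this->guids;
  }

  // The data for one item; here, just the GUID itself.
  public function getChunk($id) {
    return $id;
  }

}
```

Keeping the text analysis in one helper means getChunkCount() and getChunkIDs() cannot disagree about what the file contains.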

I submitted a patch to make it a bit easier to deal with the case where files might contain either one or many chunks (or even none): by default, a file providing only one chunk doesn't get to return a chunk ID, which wasn't working for my case. The patch (committed but not yet in a release) adds an option to override this so you always know the ID of the chunk: in my case, the GUID for the image found inside the body text, which was always needed by the image migration code.

At the other end, I needed a custom subclass of MigrateItem to deal with the data returned by getChunk(). This just needs to implement getItem(), and it can pretty much return anything you like: this is the same source data item that your migration class gets to work with.

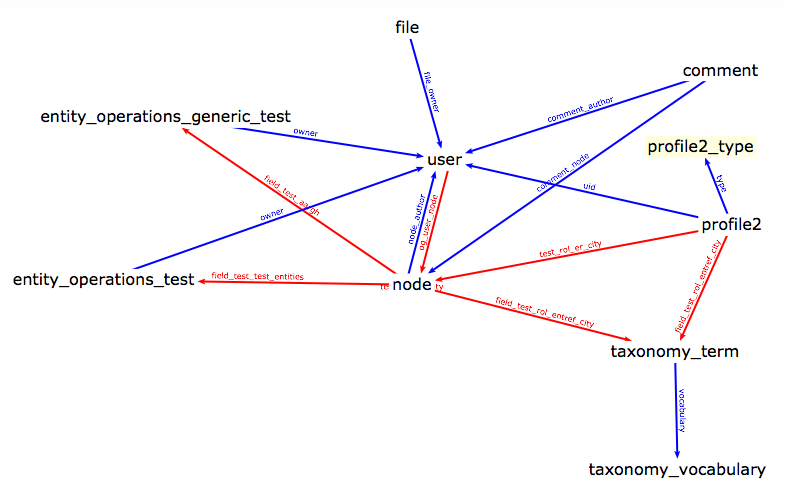
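In text form, a minimal sketch of such an item class might look like the following. Again it is standalone so that it runs outside Drupal; a real version would extend MigrateItem. The separator constant is a placeholder invented for this example (Migrate defines its own string for joining the file ID and the chunk ID), and the `guid` property name is likewise an assumption.

```php
<?php
// Placeholder separator for this sketch only; Migrate uses its own string
// to join the file ID and the chunk ID into the overall source ID.
const EXAMPLE_CHUNK_SEPARATOR = '::';

// Standalone sketch: in a real migration this class would extend MigrateItem.
class CustomImageGUIDItem {

  // Turn a source ID into the data object the migration class works with.
  public function getItem($id) {
    // The chunk ID (the image GUID) is the last piece of the source ID.
    $pieces = explode(EXAMPLE_CHUNK_SEPARATOR, $id);
    $guid = end($pieces);
    if ($guid === '') {
      // Nothing usable to migrate for this ID.
      return NULL;
    }

    $item = new stdClass();
    // Hypothetical property name; the migration can map it like any field.
    $item->guid = $guid;
    return $item;
  }

}
```

The migration class then sees each image as an ordinary source row with a `guid` property, which it can use to fetch the file from the remote server.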

So to recap, as there are quite a few classes flying around helping one another here: MigrateListFiles uses a custom MigrateContentParser implementation to extract items from the source data files, possibly more than one item per file, and MigrateSourceList then uses MigrateListFiles along with a custom MigrateItem implementation.

The setup code in my migration's constructor then looks like this:

    $parser = new CustomJSONBodyImagesParser();
    $list = new MigrateListFiles(
      // $list_dirs
      array($source_folder),
      // $base_dir
      $source_folder,
      // $file_mask
      '//',
      // $options
      array(),
      // MigrateContentParser $parser
      $parser
    );
    $item = new CustomImageGUIDItem();
    $this->source = new MigrateSourceList($list, $item, $fields);

The end result worked great, and the ability to roll back proved very useful when there turned out to be bad data here and there that needed to be cleaned up or skipped. But that's what always happens with migrations in my experience!