Drupal Planet Posts

Getting more than you bargained for: removing a Drupal module with Composer

It's no secret that I find Composer a very troublesome piece of software to work with.

I have issues with Composer on two fronts. First, its output is extremely user-unfriendly, such as the long lists of impenetrable statements about dependencies that it produces when it tells you why it can't make a change you request. Second, many Composer commands have unwanted side-effects, and these work against the practice that changes to your codebase should be as simple as possible for the sake of developer sanity, testing, and user acceptance.

I recently discovered that removing packages is one such task where Composer has ideas of its own. A command such as remove drupal/foo will take it on itself to also update some apparently unrelated packages, meaning that you either have to manage the deployment of these updates as part of your uninstallation of a module, or roll up your sleeves and hack into the mess Composer has made of your codebase.

Guess which option I went for.

Step 1: Remove the module you actually want to remove

Let's suppose we want to remove the Drupal module 'foo' from the codebase because we're no longer using it:

$ composer remove drupal/foo

This will have two side effects, one of which you might want, and one of which you definitely don't.

Side effect 1: dependent packages are removed

This is fine, in theory. You probably don't need the modules that are dependencies of foo. Except... Composer knows about dependencies declared in composer.json, which for Drupal modules might be different from the dependencies declared in module info.yml files (if maintainers haven't been careful to ensure they match). UPDATE: I've been informed in comments that drupal.org's packaging process ensures these are kept in sync. So that's one less thing to worry about!

Furthermore, Composer doesn't know about Drupal configuration dependencies. You could have the situation where you installed module Foo, which had a dependency on Bar, so you installed that too. But then you found Bar was quite useful in itself, and you've created content and configuration on your site that depends on Bar. Ideally, at that point, you should have declared Bar explicitly in your project's root composer.json, but most likely, you haven't.

So at this point, you should go through Composer's output of what it's removed, and check your site doesn't have any of those Drupal modules enabled.

I recommend taking the list of Drupal modules that Composer has just told you it's removed in addition to the requested one, and checking the status of each one on your live site:

$ drush pml | ag MODULE

If you find that any modules are still enabled, then revert the changes you've just made with the remove command, and declare the modules in your root composer.json, copying the declaration from the composer.json file of the module you are removing. Then start step 1 again.

Side effect 2: unrelated packages are updated

This is undesirable basically because any package update is something that has to be evaluated and tested before it's deployed. Having that happen as part of a package removal turns what should be a straightforward task into something complex and unpredictable. It forces the developer to handle two operations that should be separate as one.

(It turns out that the maintainers of Composer don't even consider this to be a problem, and as I have unfortunately come to expect, the issue on GitHub is a fine example of bad maintainership (for the nadir, see the issue on the use of JSON as a format for the main composer file) -- dismissing the problems that users explain they have, claiming the problems are by design, and so on.)

So to revert this, you need to pick apart the changes Composer has made, and reverse some of them.

Before you go any further, commit everything that Composer changed with the remove command. In my preferred method of operation, that means all the files, including the modules folder and the vendor folder. I know that Composer recommends you don't do that, but frankly I think trusting Composer not to damage your codebase on a whim is folly: you need to be able to back out of any mess it may make.

Step 2: Repair composer.lock

The composer.lock file is the record of how the packages currently are, so to undo some of the changes Composer made, we undo some of the changes made to this file, then get Composer to update based on the lock.

First, restore composer.lock to the version it was at before you started:

$ git checkout HEAD^ composer.lock

Unstage it. I prefer a GUI for git staging and unstaging operations, but on the command line it's:

$ git reset composer.lock

Your composer lock file now looks as it did before you started.

Use either git add -p or your favourite git GUI to pick out the right bits. Understanding which bits are the 'right bits' takes a bit of mental gymnastics: overall, we want to keep the changes in the last commit that removed packages completely, but we want to discard the changes that upgrade packages.

But here we've got a reverted diff. So in terms of what we have here, we want to discard changes that re-add a package, and stage and commit the changes that downgrade packages.

When you're done staging:

- the change to the content hash should be unstaged

- chunks that are a whole package should be unstaged

- chunks that change a version should be staged (be sure to get all the bits that relate to a package)

Then commit what is staged, and discard the rest.

Then do a git diff of composer.lock against your starting point: you should see only complete package removals.
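That check can also be scripted rather than read off a diff. The following is a minimal sketch, assuming only that both lock files follow the usual composer.lock layout of `packages` and `packages-dev` arrays whose entries carry `name` and `version`. The function names are hypothetical, and `master` stands in for whatever your starting point is.

```php
<?php

/**
 * Maps package name => version for a decoded composer.lock array.
 */
function lock_package_versions(array $lock): array {
  $versions = [];
  foreach (['packages', 'packages-dev'] as $section) {
    foreach (($lock[$section] ?? []) as $package) {
      $versions[$package['name']] = $package['version'];
    }
  }
  return $versions;
}

/**
 * Sorts the differences between two lock files into removed, added, changed.
 */
function compare_locks(array $before, array $after): array {
  $old = lock_package_versions($before);
  $new = lock_package_versions($after);

  $changed = [];
  foreach (array_intersect_key($old, $new) as $name => $old_version) {
    if ($new[$name] !== $old_version) {
      $changed[$name] = $old_version . ' => ' . $new[$name];
    }
  }

  return [
    'removed' => array_keys(array_diff_key($old, $new)),
    'added' => array_keys(array_diff_key($new, $old)),
    'changed' => $changed,
  ];
}

// Example usage, assuming the starting point is the master branch:
// $before = json_decode(shell_exec('git show master:composer.lock'), TRUE);
// $after = json_decode(file_get_contents('composer.lock'), TRUE);
// print_r(compare_locks($before, $after));
```

If the repair in step 2 went to plan, 'removed' lists the module and its dependencies, while 'added' and 'changed' are both empty.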

Step 3: Restore packages with unrelated changes

Finally, do:

$ composer update --lock

This will restore the packages that Composer updated against your will in step 1 to their original state.

If you are committing Composer-managed packages to your repository, commit them now.

As a final sanity check, do a git diff against your starting point, like this:

$ git diff --name-status master

You should see mostly deleted files. To verify there's nothing that shouldn't be there in the changed files, do:

$ git diff --name-status master | ag '^[^D]'

You should see only composer.json, composer.lock, and the autoloader's files.

PS. If I am wrong and there IS a way to get Composer to remove a package without side-effects, please tell me.

I feel I have exhausted all the options of the remove command:

- --no-update only changes composer.json, and makes no changes to package files at all. I'm not sure what the point of this is.

- --no-update-with-dependencies only removes the one package, and doesn't remove any dependencies that are not required anywhere else. This leaves you having to pick through composer.json files yourself and remove dependencies individually, and completely obviates the purpose of a package manager!

Why is something as simple as a package removal turned into a complex operation by Composer? Honestly, I'm baffled. I've tried reasoning with the maintainers, and it's a brick wall.

PPS. Since writing this post, I’ve made Composer Manifest, a small Composer plugin that makes it easier to see what Composer has decided to change behind your back. Every time you do a Composer update, install, or remove, it writes a YAML file that lists all the installed packages with their versions. Committing that to your repository means you have an easy way to see exactly what's been changed and when.

Drupal Code Builder: the analytical, adaptive code generator

It's now just over 10 years since webchick handed maintainership of the Module Builder project over to me. I can still remember nervously approaching her at the end of a day at DrupalCon 2008, outside the building where people were chatting, and asking her if she'd had time to look at the patches I'd posted for it. I was at my first DrupalCon; she'd just been named as the core co-maintainer for the work about to start on Drupal 7. No, she hadn't, she said, and she instantly came to the conclusion that she'd never have the time to look at them, and so got her laptop out of her rucksack and there and then edited the project node to make me the maintainer.

Given the longevity of the project, I am often surprised when I talk to people that they don't know what its crucial advantage is over the other Drupal code generating tools that now also exist. Drupal Code Builder, as it's now called, is fundamentally different from Drupal Console's Generator command, and the Drupal Code Generator library that’s included in Drush 9.

I feel that perhaps this requires a buzzword, to make it more noticeable and memorable.

So here is one: Drupal Code Builder is an analytical code generator.

I'm going to add a second one: the Drupal Code Builder Drush commands and Module Builder (which still exists, though it is now just a module that provides a Drupal-based UI for the Drupal Code Builder library) are adaptive.

What do I mean by those?

When you first install Drupal Code Builder, it has no information about Drupal hooks, or plugin types, or services. There is no data in the codebase on how to generate a hook_form_alter() implementation, or a Block plugin, or how to inject the entity_type.manager service.

Before you can generate code, you need to run a command or submit an admin form which will analyse your entire codebase, and store the resulting data on hooks, plugin types, services, and other Drupal structures (the list of what is analysed keeps growing).

The consequence of this is huge: Drupal Code Builder is not limited to core hooks, or to the hooks that it's been programmed for. Drupal Code Builder knows all the hooks in your codebase: from core, from contrib modules, even from any of your own custom code that invents hooks.

Now repeat that statement for plugin types, for services, and so on.

And that analysis process can, and should, be repeated when you download a new module, because then any Drupal structures (hooks, plugin types, etc) that are defined in new modules get added to the list of what Drupal Code Builder can generate.

This means that you can use Drupal Code Builder to do something like this:

- Generate a new plugin type. This creates a plugin manager service class and declaration, an annotation class that defines the plugin annotation, and an interface and base class for the plugins.

- Re-run DCB's analysis.

- Generate a plugin of the type you've just made.

When I say that this technique of analysis makes Drupal Code Builder more powerful than other code generators, I'm not even blowing my own trumpet: the module I was handed in 2008 did the same. Back then in the Drupal 6 era it needed to connect to drupal.org's CVS repository to download the api.php files that document a module's hooks (which is why the Drush command for updating the code analysis prior to Drush 9 is called mb-download). For Drupal 7, the api.php files were moved into the core Drupal codebase, so from that point the process would scan the site's codebase directly. For Drupal 8, I added lots more code analysis, of plugin types and services, but still, the original idea remains: Drupal Code Builder knows how to detect Drupal structures, so it can know more structures than can be hardcoded.

This analysis can get pretty complex. While for hooks, it's just a case of running a regex on api.php files, for detecting information about plugin types, all sorts of techniques are used, such as searching the class parentage of all the plugins of a type to try to guess whether there is a plugin base class. It’s not always perfect, and it could always use more refinement, but it’s a more powerful approach than hardcoding a necessarily finite number of cases. (If only plugin types were declared in some way, or if the documentation for them were systematic in the way hook documentation is, this would all be so much simpler, and much more importantly, it would be more accurate!)
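To give a flavour of the simple end of that analysis, here is a minimal sketch of pulling hook names out of the contents of an api.php file with a regex. It assumes hooks are documented as sample functions named hook_*(). The function name and the pattern are illustrative guesses, not the code Drupal Code Builder actually uses.

```php
<?php

/**
 * Returns the names of the hooks documented in api.php source code.
 *
 * Hypothetical helper for illustration only.
 */
function find_documented_hooks(string $api_php_code): array {
  // Hook documentation takes the form of sample functions named hook_*(),
  // each declared at the start of a line.
  preg_match_all('/^function (hook_\w+)\(/m', $api_php_code, $matches);
  return $matches[1];
}

// Example usage, assuming a Drupal 8 codebase in the current directory:
// $code = file_get_contents('core/modules/node/node.api.php');
// print_r(find_documented_hooks($code));
```

Running something like this over every api.php file in the codebase is what lets hooks from contrib and custom modules show up alongside core's.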

My second buzzword, that UIs for Drupal Code Builder are adaptive, describes the way that neither the Drush command nor the Drupal module needs to know what Drupal Code Builder can build. They merely know the API for describing properties, and how to present them to the user, to then pass the input values to Drupal Code Builder to get the generated code.

This is analogous to the way that Form API doesn’t know anything about a particular form, just about the different kinds of form elements.

This isn’t as exciting or indeed relevant to the end-user, but it does mean that development on Drupal Code Builder can move quickly, as adding new things to generate doesn’t require coordinated releases of software packages for the UI. In fact, I think nearly every new release of Drupal Code Builder has featured some sort of new code generating functionality, while keeping the API the same.

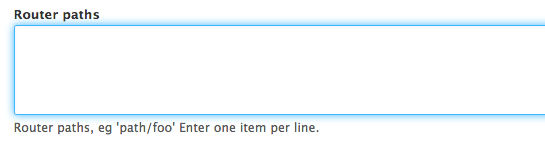

For example, this used to be the UI for adding a route on Drupal and Drush:


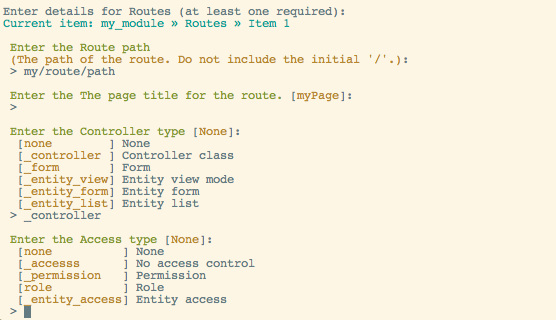

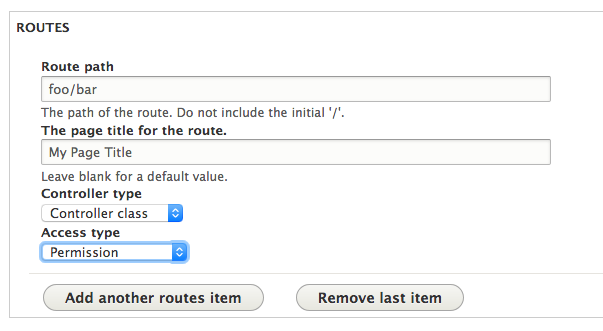

A later release of Drupal Code Builder turned the UI into this, without the Drush command or Module Builder needing their code to be updated:

Similarly, an even later version of Drupal Code Builder added code analysis to detect all top-level admin menu items, and so the admin settings form generation now lets you pick where to place the form in the menu.

It would be nice to think that a couple of buzzwords could gain Drupal Code Builder more attention and more users, but I fear that Drupal Console’s generator has got rather more traction in Drupal 8, despite its huge limitation. It’s disappointing that Drush has now added a third code generator to the mix, to even further dilute the ecosystem, and that it’s just as limited by hardcoding.

So where do we go from here?

Well, people get attached to UIs, but they don’t care much about what powers them, especially in this day and age of Composer, where adding dependencies no longer creates an imposition on the end-user.

So I suggest the following: the code analysis portion of Drupal Code Builder could be extracted to a new package. It doesn’t depend on anything on the code generation side, so that should be fairly simple. It provides an API that supports analysis being run in batches, which could maybe do with being spruced up, but it’s good enough for a 1.0.0 version for now.

Then, any code generating system would be able to use it. Both Console and Drush could replace their hardcoded lists of hooks and plugins with analytical data, while keeping the commands they expose to the end-user unchanged.

I’ll be at DrupalEurope in Darmstadt, where I’ll be running a BoF to discuss where we go next with code generation on Drupal.

Making builder code look like output code

One of the big challenges with updating Drupal Code Builder for Drupal 8 has been the sheer variety of code to be output. On earlier versions of Drupal, it was just about hooks, and all that needed to be done was to take the API documentation code and replace 'hook_' with the module name. There were info files too, and Drupal 7 added the placing of hooks into different .inc files, but compared to this, Drupal 8 has things like plugin annotations, fluent method calls for content entity baseFieldDefinitions(), FormAPI arrays, not to mention PHP class methods, and more.

But one of the things I enjoy about working on DCB is that I am free to experiment with different ideas, much more so than with work on core or even contrib. It is its own system, without any need to work with what a framework supplies, and it has no need to be extensible. So I can try a new way of doing things as often as I want, and clean up when I've had time to figure out which way works best.

For example, up until recently, the code for a field definition in baseFieldDefinitions() was getting generated in three different ways.

First, the old-fashioned way of doing it line by line, then concatenating the array with a "\n" to make the final code. This is the way most of the old code in DCB was done, but with things that need handling of terminal commas or semicolons, and nesting indents and so on, it was starting to get really clunky.

So then I tried writing something loosely inspired by Drupal's RenderAPI. Because that's a nice big hammer that seems to fit a lot of nails: make a big array of data, chuck your stuff into it, then hand it over to something that makes the output. Except, not so good. Writing the code to make the right sort of array was fiddly. The array of data needed to combine actual data and metadata (such as the class of an annotation), which added levels to the nesting.

Then I hit on an idea: baseFieldDefinitions() fields are a fluent interface, like this:

    $fields['changed'] = BaseFieldDefinition::create('changed')
      ->setLabel(t('Changed'))
      ->setDescription(t('The time that the node was last edited.'))
      ->setRevisionable(TRUE)
      ->setTranslatable(TRUE);

What if the code that builds this could be the same, to the point where you could just copy-paste code from, say, the node entity class, and make a few tweaks? Creating the code in DCB would be much simpler, and having the DCB code look like the output code would make debugging easier too.

Using a class with the magic __call() method lets us have just that: a renderer object that treats a method call as some information about code to render. Here's what the builder code for the base field definition code looks like now:

    $changed_field_calls = new FluentMethodCall;
    $changed_field_calls
      ->setLabel(FluentMethodCall::t('Changed'))
      ->setDescription(FluentMethodCall::t('The time that the entity was last edited.'));
    if ($use_revisionable) {
      $changed_field_calls->setRevisionable(TRUE);
    }
    if ($use_translatable) {
      $changed_field_calls->setTranslatable(TRUE);
    }
    $method_body = array_merge($method_body, $changed_field_calls->getCodeLines());

It's not yet perfect, as the first line isn't done by this, and the handling of the t() calls could do with some polish; probably by creating a separate class called something like FunctionCall, such that FunctionCall::somefunction() returns the code for a call to somefunction().
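For readers who haven't used __call() this way, a renderer of this kind might be sketched roughly as follows. This is an illustration of the technique under my own assumptions, not the FluentMethodCall class that ships with DCB: the class names FluentCallSketch and CodeFragment, and the two-space indent on the output lines, are invented for the example.

```php
<?php

/**
 * Holds a piece of code that is already rendered, such as a t() call.
 */
class CodeFragment {

  public $code;

  public function __construct($code) {
    $this->code = $code;
  }

}

/**
 * Records method calls and renders them as the lines of a fluent call chain.
 */
class FluentCallSketch {

  protected $calls = [];

  /**
   * Catches any call to an undefined method and records it.
   */
  public function __call($name, $arguments) {
    $this->calls[] = ['name' => $name, 'arguments' => $arguments];
    // Return the object so that the recording calls can themselves be chained.
    return $this;
  }

  /**
   * Wraps a string so that it is rendered as a call to t().
   */
  public static function t($string) {
    return new CodeFragment('t(' . var_export($string, TRUE) . ')');
  }

  /**
   * Renders the recorded calls, one line of code per call.
   */
  public function getCodeLines() {
    $lines = [];
    foreach ($this->calls as $call) {
      $arguments = [];
      foreach ($call['arguments'] as $argument) {
        $arguments[] = $this->renderArgument($argument);
      }
      $lines[] = '  ->' . $call['name'] . '(' . implode(', ', $arguments) . ')';
    }
    if ($lines) {
      // Only the last call in the chain takes the terminating semicolon.
      $lines[count($lines) - 1] .= ';';
    }
    return $lines;
  }

  protected function renderArgument($argument) {
    if ($argument instanceof CodeFragment) {
      return $argument->code;
    }
    if (is_bool($argument)) {
      // Drupal coding standards use uppercase booleans.
      return $argument ? 'TRUE' : 'FALSE';
    }
    return var_export($argument, TRUE);
  }

}
```

The point of the design is that the fiddly parts (quoting, booleans, the final semicolon) live in one place, while the calling code reads almost exactly like the code it produces.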

But the efficiency and elegance of this approach has led me to devise a new principle for DCB: builder code should look as much as possible like the code that it outputs.

So applying this approach to outputting annotations, the code now looks like this:

    $annotation = ClassAnnotation::ContentEntityType([
      'id' => 'cat',
      'label' => ClassAnnotation::Translation("Cat"),
      'label_count' => ClassAnnotation::PluralTranslation([
        'singular' => "@count content item",
        'plural' => "@count content items",
      ]),
    ]);
    $annotation_lines = $annotation->render();

Magic methods are used there as well, this time for static calls. The similarity to the output code isn't as good, as annotations aren't PHP code, but it's still close enough that you can copy the code you want to output, make a few simple changes, and you have the builder code.

This work has embodied another principle that I've come to follow: complexity and ugliness should be pushed down, hidden, and encapsulated. Here, the ClassAnnotation and FluentMethodCall classes have to do fiddly stuff like quoting string values and recursing into nested arrays. They have to handle special cases, such as the last line of a fluent call having a semicolon and the last line of an annotation having no comma. All of that is hidden from the code that uses them. That can get on with doing the interesting bits.
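The static variant of the trick relies on __callStatic(), which receives the name of whatever undefined static method was called. A minimal sketch, assuming annotation values are either plain strings or nested annotations, could look like the following. AnnotationSketch is a made-up name, it does no escaping of quotes, and it is not DCB's ClassAnnotation.

```php
<?php

/**
 * Builds annotation lines from static calls named after annotation classes.
 */
class AnnotationSketch {

  protected $class;

  protected $data;

  public function __construct($class, $data) {
    $this->class = $class;
    $this->data = $data;
  }

  /**
   * Turns AnnotationSketch::Foo($data) into an object for a @Foo annotation.
   */
  public static function __callStatic($name, $arguments) {
    return new static($name, $arguments[0]);
  }

  /**
   * Renders the annotation as lines, without the docblock comment markers.
   */
  public function render($indent = 0) {
    $pad = str_repeat('  ', $indent);

    if (!is_array($this->data)) {
      // A single value, as in @Translation("Cat").
      return [$pad . '@' . $this->class . '("' . $this->data . '")'];
    }

    $lines = [$pad . '@' . $this->class . '('];
    foreach ($this->data as $key => $value) {
      if ($value instanceof self) {
        $nested = $value->render($indent + 1);
        // Splice the key onto the first line of the nested annotation.
        $nested[0] = $pad . '  ' . $key . ' = ' . ltrim($nested[0]);
        $nested[count($nested) - 1] .= ',';
        $lines = array_merge($lines, $nested);
      }
      else {
        $lines[] = $pad . '  ' . $key . ' = "' . $value . '",';
      }
    }
    // The closing line of the annotation itself gets no comma.
    $lines[] = $pad . ')';
    return $lines;
  }

}
```

Calling AnnotationSketch::ContentEntityType() with the same array as in the builder code above would give lines starting `@ContentEntityType(` and ending `)`, with the nested @PluralTranslation indented one level further.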

The quick and dirty debug module

There's a great module called the debug module. I'd give you the link… but it doesn't exist. Or rather, it's not a module you download. It's a module you write yourself, and write again, over and over again.

Do you ever want to inspect the result of a method call, or the data you get back from a service, the result of a query, or the result of some other procedure, without having to wade through the steps in the UI, submit forms, and so on?

This is where the debug module comes in. It's just a single page which outputs whatever code you happen to want to poke around with at the time. On Drupal 8, that page is made with:

- an info.yml file

- a routing file

- a file containing the route's callback. You could use a controller class for this, but it's easier to have the callback just be a plain old function in the module file, as there's no need to drill down a folder structure in a text editor to reach it.

(You could quickly whip this up with Module Builder!)

Here's what my router file looks like:

    joachim_debug:
      path: '/joachim-debug'
      defaults:
        _controller: 'joachim_debug_page'
      options:
        _admin_route: TRUE
      requirements:
        _access: 'TRUE'

My debug module is called 'joachim_debug'; you might want to call yours something else. Here you can see we're granting access unconditionally, so that whichever user I happen to be logged in as (or none) can see the page. That's of course completely insecure, especially as we're going to output all sorts of internals. But this module is only meant to be run on your local environment and you should on no account commit it to your repository.

I don't want to worry about access, and I want the admin theme so the site theme doesn't get in the way of debug output or affect performance.

If you sometimes want to see themed output, you can add a second route with a different path, which serves up the same content but without the admin theme option:

    joachim_debug_theme:
      path: '/joachim-debug-theme'
      defaults:
        _controller: 'joachim_debug_page'
      requirements:
        _access: 'TRUE'

The module file starts off looking like this:

    <?php

    opcache_reset();

    function joachim_debug_page() {
      $build = [
        '#markup' => "aaaaarrrgh!!!!",
      ];

      /*
      // ============================ TEMPLATE

      return $build;
      */

      return $build;
    }

The commented-out section is there for me to quickly copy and paste a new section of code anytime I want to do something different. I always leave the old code in below the return, just in case I want to go back to it later on, or copy-paste snippets from it.

Back in the Drupal 6 and 7 days, the return of the callback function was merely a string. On Drupal 8, it has to be a proper render array. The return text used to be 'It's going wrong!' but these days it's the more expressive 'aaaaarrrgh'. Most of the time, the output I want will be the result of a dsm() call, so the $build is there just so Drupal's routing system doesn't complain about a route callback not returning anything.

Here are some examples of the sort of code I might have in here.

    // ============================ Route provider
    $route_provider = \Drupal::service('router.route_provider');
    $path = 'node/%/edit';
    $rs = $route_provider->getRoutesByPattern($path);
    dsm($rs);

    return $build;

Here I wanted to see what the route provider service returns. (I have no idea why, this is just something I found in the very long list of old code in my module's menu callback, pushed down by newer stuff.)

// ============================ order receipt email

    // ============================ order receipt email
    $order = entity_load('commerce_order', 3);
    $build = [
      '#theme' => 'commerce_order_receipt',
      '#order_entity' => $order,
      '#totals' => \Drupal::service('commerce_order.order_total_summary')->buildTotals($order),
    ];

    return $build;

I wanted to work with the order receipt emails that Commerce sends. But I don't want to have to make a purchase, complete an order, and then look in the mail logger just to see the email! This is quicker: all I have to do is load up my debug module's page (mine is at the path 'joachim-debug', which is easy to remember for me; you might want to have yours somewhere else), and vavoom, there's the rendered email. I can tweak the template, change the order, and just reload the page to see the effect.

As you can see, it's quick and simple. There are no safety checks, so if you ever put code here that does something (such as an entity_delete() call, which is useful for deleting entities in bulk quickly), be sure to comment out the code once you're done with it, or your next reload might blow up! And of course, it's only ever to be used on your local environment; never on shared development sites, and certainly never on production!

I once read something about how a crucial piece of functionality required for programming, and more specifically, for ease of learning to program with a language or a framework, is being able to see and understand the outcomes of the code you are writing. In Drupal 8 more than ever, being able to understand the systems you're working with is vital. There are tools such as debuggers and the Devel and Devel Contrib modules' information pages, but sometimes quick and dirty does the job too.

Regenerating plugin dependency injection with Module Builder

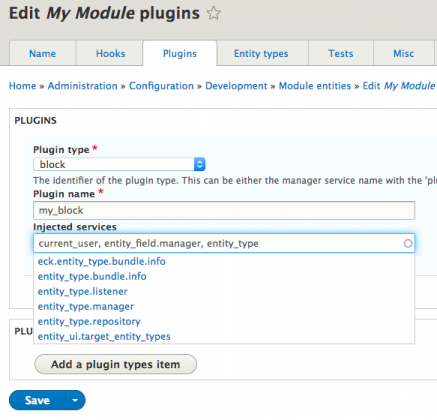

Dependency injection is a pattern that adds a lot of boilerplate code, but Drupal Code Builder makes it easy to add injected services to plugins, forms, and service classes.

Now that the Drupal 8 version of Module Builder (the Drupal front-end to the Drupal Code Builder library) uses an autocomplete for service names in the edit form, adding injected services is even easier, and any of the hundreds of services in your site’s codebase (443 on my local sandbox Drupal 8 site!) can be injected.

I often use this when I want to add a service to an existing plugin: re-generate the code, and copy-paste the new code I need.

This is an area in which Module Builder now outshines its Drush counterpart, because unlike the Drush front end for Drupal Code Builder, which generates code with input parameters every time, Module Builder lets you save your settings for the generated module (as a config entity).

So you can return to the plugin you generated to start with, add an extra service to it, and generate the code again. You can copy and paste, or have Module Builder write the file and then use git to revert custom code it’s removed. (The ability to insert generated code into existing files is on my list of desirable features, but is realistically a long way off, as it would be rather complex, and require the addition of a code parsing library.)

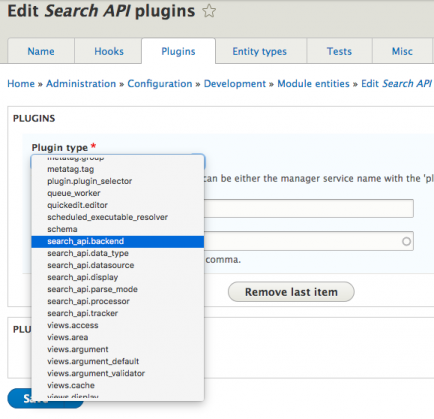

But why stop at generating code for your own modules? I recently filed an issue on Search API, suggesting that its plugins could do with tweaking to follow the standard core pattern of a static factory method and constructor, rather than rely on setters for injection. It’s not a complex change, but a lot of code churn. Then it occurred to me: Drupal Code Builder can generate that boilerplate code: simply create a module in Module Builder called ‘search_api’, and then add a plugin with the name of one that is already in Search API, and then set its injected services to the services the real plugin needs.

Drupal Code Builder already knows how to build a Search API plugin: its code analysis detects the right plugin base class and annotation to use, and also any parameters that the constructor method should pass up to the base class.

So it’s pretty quick to copy the plugin name and service names from Search API’s plugin class to the form in Module Builder, and then save and generate the code, and then copy the generated factory methods back to Search API to make a patch.

I’m now rather glad I decided to use config entities for generated entities. Originally, I did that just because it was a quick and convenient way to get storage for serialized data (and since then I discovered in other work that map fields are broken in D8 so I’m very glad I didn’t try to make them content entities!). But the ability to save the generating settings for a module, and then return to it to add to them has proved very useful.

Triggering events on the fly

As far as I know, there's nothing (yet) for triggering an arbitrary event. The complication is that every event uses a unique event class, whose constructor requires specific things passing, such as entities pertaining to the event.

Today I wanted to test the emails that Commerce sends when an order completes, and to avoid having to keep buying a product and sending it through checkout, I figured I'd mock the event object with Prophecy, mocking the methods that the OrderReceiptSubscriber calls (this is the class that does the sending of the order emails). Prophecy is a unit testing tool, but its objects can be created outside of PHPUnit quite easily.

Here's my quick code:

    $order = entity_load('commerce_order', ORDER_ID);

    $prophet = new \Prophecy\Prophet;
    $event = $prophet->prophesize('Drupal\state_machine\Event\WorkflowTransitionEvent');
    $event->getEntity()->willReturn($order);

    $subscriber = \Drupal::service('commerce_order.order_receipt_subscriber');
    $subscriber->sendOrderReceipt($event->reveal());

Could some sort of generic tool be created for triggering any event in Drupal? Perhaps. We could use reflection to detect the methods on the event class, but at some point we need some real data for the event listeners to do something with. Here, I needed to load a specific order entity and to know which method on the event class returns it. For another event, I'd need some completely different entities and different methods.

We could maybe detect the type that the event methods return (by sniffing in the docblock... once we go full PHP 7, we could use reflection on the return type), and then present an admin UI that shows a form element for each method, allowing you to enter an entity ID or a scalar value.
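The reflection half of that idea is simple enough to sketch. The following assumes PHP 7.1 or later and event classes that declare return types. The function name is hypothetical, and methods without a single declared return type simply come back as NULL.

```php
<?php

/**
 * Lists an event class's public methods with their declared return types.
 *
 * Hypothetical helper: returns an array of method name => type name, with
 * NULL where the method declares no single named return type.
 */
function describe_event_methods(string $event_class): array {
  $methods = [];
  $reflection = new \ReflectionClass($event_class);
  foreach ($reflection->getMethods(\ReflectionMethod::IS_PUBLIC) as $method) {
    // The constructor and static methods are not things a listener calls
    // on the event object to get data.
    if ($method->isConstructor() || $method->isStatic()) {
      continue;
    }
    $type = $method->getReturnType();
    $methods[$method->getName()] = $type instanceof \ReflectionNamedType ? $type->getName() : NULL;
  }
  return $methods;
}

// Example usage, assuming the state_machine module's classes are autoloadable:
// print_r(describe_event_methods('Drupal\state_machine\Event\WorkflowTransitionEvent'));
```

Note that inherited methods, such as those from the base event class, are included in the list too, so a real UI would probably want to filter those out.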

Still, you'd need to look at the code you want to run, the event listener, to know which of those you'd actually want to fill in.

Would it save more time than cobbling together code like the above? Only if you multiply it by a sufficiently large number of developers, as is the case with many developer tools.

It's the sort of idea I might have tinkered with back in the days when I had time. As it is, I'm just going to throw this idea out in the open.

Drupal Code Builder unit testing: bringing in the heavy stuff

I started adding unit tests to Drupal Code Builder about 3 years ago, and I’ve been gradually expanding on them ever since. It’s made refactoring the code a pleasant rather than stressful experience.

However, while all generator types are covered, the level of detail the tests go into isn’t that deep. Back when I wrote the tests, they mostly needed to check for hook implementations that were generated, and so quick and dirty regexes on the generated code did the job: match 'mymodule_form_alter' in the generated code, basically. I gradually extended those to cover things like class declarations and methods, but that approach is very much cracking at the seams.

So it’s time to switch to something more powerful, and more suited to the task.

I’ve already removed my frankly hideous attempt at verifying that generated code is correctly-formed PHP, replacing it with a call to PHP’s own code linter. My own code was running the generated PHP code files through eval() (yes, I know!) to check nothing crashed, which was quick and worked but only up to a point: tests couldn’t create the same function twice, as eval()ing code that contains a function declaration brings it into the global namespace, and it didn’t work at all for classes, as the parent classes in Drupal core or contrib aren't present while tests are being run.

It's already proved worthwhile, as once I'd converted the tests, I found an error in the generated code: a stray quote mark in base field definitions for a content entity, which my approach wasn't picking up, and never would have.
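For anyone wanting to do the same in their own tests, a lint check can be as small as the sketch below. It assumes the tests run under the PHP command line, so that PHP_BINARY points at a php executable that accepts the -l option. The function name is made up, and this is not the code in DCB's test base class.

```php
<?php

/**
 * Checks that a string of PHP code parses, using PHP's own linter.
 *
 * Hypothetical helper: writes the code to a temporary file and runs
 * 'php -l' on it, which parses the file without executing it.
 */
function php_code_lints(string $code): bool {
  $file = tempnam(sys_get_temp_dir(), 'lint');
  file_put_contents($file, $code);

  // The linter exits with a non-zero status when there is a syntax error.
  exec(escapeshellarg(PHP_BINARY) . ' -l ' . escapeshellarg($file) . ' 2>&1', $output, $status);

  unlink($file);
  return $status === 0;
}
```

Because nothing is executed, the same function name can appear in any number of generated files, and a class can extend a parent that doesn't exist in the test environment.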

The second phase is going to be to use PHPCS and Drupal Coder to check that generated code follows Drupal Coding Standards. I'm currently getting that to work in my testing base class, although it might be a while before I push it, as I suspect it's going to complain about quite a few nipicks in the generated code that I'll then have to spend some time fixing.

The third phase (this is a 3-phase programme, all the best ones are) is going to be to look into using PHP-Parser to check for the presence of functions and methods in the code, rather than my regex-based approach. This is going to allow for much more thorough checking of the generated code, with things such as method order in the code, service injection, and more.

After that, it'll be back to some more refactoring and code clean-up, and then some more code generators! I have a few ideas of what else Drupal Code Builder could generate, but more ideas are welcome in the issue queue on github.

Brief update on Drupal Code Builder

I've completely revamped the Drush commands for Drupal Code Builder:

- First, they're now in their own project on Github

- Second, I've rewritten them completely for Drush 9, and they are now fully interactive.

- Third, they are now geared towards adding to existing modules, rather than generating a module as a single shot. That approach made sense in the days of Drupal 6 when it was just hook implementations, but I increasingly find I want to add a plugin, a service, a form, to a module I've already started.

The downside is that installing these is rather tricky at the moment due to some current limitations in Drush 9 beta; see details in the project README, which has full instructions for workarounds.

Now that's out of the way, I'm pushing on with some new generators for the Drupal Code Builder library itself. On my list are:

- plugin types (as in the plugin manager service, base class and interface, and declaration for Plugins module)

- entity type

- entity type handlers

- your suggestions in the issue queue...

And of course more unit tests and refactoring of the codebase.

Dorgflow: a tool to automate your drupal.org patch workflow

I’ve written previously about git workflow for working on drupal.org patches, and about how we don’t necessarily need to move to a github-style system on drupal.org, we just maybe need better tools for our existing workflow. It’s true that much of it is repetitive, but then repetitive tasks are ripe for automation. In the two years since I released Dorgpatch, a shell script that handles the making of patches for drupal.org issues, I’ve been thinking of how much more of the drupal.org patch workflow could be automated.

Now, I have released a new script, Dorgflow, and the answer is just about everything. The only thing that Dorgflow doesn’t automate is uploading the patch to drupal.org (and that’s because drupal.org’s REST API is read-only). Oh, and writing the code to actually fix bugs or create new features. You still have to do that yourself, along with your cup of coffee.

So assuming you’ve made your own hot beverage of choice, how does Dorgflow work?

Simply! To start with, you need to have an up to date git clone of the project you want to work on, be it Drupal core or a contrib project.

To start work on an issue, just do:

$ dorgflow https://www.drupal.org/node/123456

You can copy and paste the URL from your browser. It doesn’t matter if it has an anchor link on the end, so if you followed a link from your issue tracker and it has ‘#new’ at the end, or clicked down to a comment and it has ‘#comment-1234’ that’s all fine.
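As a rough illustration of why the anchor doesn't matter, here is a minimal shell sketch of pulling the issue number out of such a URL. This is not necessarily how Dorgflow does it internally; the variable names are made up for the example.

```shell
# Example URL with an anchor on the end, as copied from a browser.
url='https://www.drupal.org/node/123456#comment-1234'

# Drop everything from the first '#' onwards (no-op if there is no anchor).
url_no_anchor="${url%%#*}"

# The issue number is the last path component.
issue_number="${url_no_anchor##*/}"

echo "$issue_number"
```

The same two expansions give the same result for a URL ending in '#new', or for one with no anchor at all.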

The first thing this command does is make a new git branch for you, using the issue number and the issue title. It then also downloads and applies all the patch files from the issue node, and makes a commit for each one. Your local git now shows you the history of the work on the issue. (Note though that if a patch no longer applies against the main branch, then it’s skipped, and if a patch has been set to not be displayed on the issue’s file list, then it’s skipped too.)

Let’s see how this works with an actual issue. Today I wanted to review the patch on an issue for Token module. The issue URL is https://www.drupal.org/node/2782605. So I did:

$ dorgflow https://www.drupal.org/node/2782605

That got me a git history like this:

* 6d07524 (2782605-Move-list-of-available-tokens-from-Help-to-Reports) Patch from Drupal.org. Comment: 35; URL: https://www.drupal.org/node/2782605#comment-11934790; file: token-move-list-of-available-tokens-2782605-34.patch; fid 5784728. Automatic commit by dorgflow.

* 6f8f6e0 Patch from Drupal.org. Comment: 15; URL: https://www.drupal.org/node/2782605#comment-11666939; file: 2782605-13.patch; fid 5710235. Automatic commit by dorgflow.

/

* a3b68cc (8.x-1.x) Issue #2833328 by Berdir: Handle bubbleable metadata for block title token replacements

* [older commits…]

What we can see here is:

- Git is now on a feature branch, called ‘2782605-Move-list-of-available-tokens-from-Help-to-Reports’. The first part is the issue number, and the rest is from the title of the issue node on drupal.org.

- Two patches were found on the issue, and a commit was made for each one. Each patch’s commit message gives the comment index where the patch was posted, the URL to the comment, the patch filename, and the patch file entity ID (these last two are less interesting, but are used by Dorgflow when you update a feature branch with newer patches from an issue).

The commit for patch 35 will obviously only show the difference between it and patch 15, an interdiff effectively. To see what the patch actually contains, take a diff from the master branch, 8.x-1.x.
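To make the difference concrete, here is a small sketch in a throwaway repository. Everything in it is made up for the demonstration (the temporary directory, the file name, the shortened branch name '2782605-demo', and the 'Demo' git identity); only the two git diff commands at the end are the point.

```shell
# Throwaway identity so the commits work without any global git config.
export GIT_AUTHOR_NAME=Demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=Demo GIT_COMMITTER_EMAIL=demo@example.com

repo="$(mktemp -d)"
cd "$repo"
git init -q
git checkout -q -b 8.x-1.x
printf 'line one\n' > token.module
git add token.module
git commit -q -m "Main branch commit"

# Feature branch with one commit per patch.
git checkout -q -b 2782605-demo
printf 'line two\n' >> token.module
git commit -q -am "First patch"
printf 'line three\n' >> token.module
git commit -q -am "Second patch"

# The latest commit on its own: effectively the interdiff.
interdiff_added="$(git diff HEAD~1 HEAD | grep -c '^+[^+]')"

# The diff from the main branch: what the latest patch actually contains.
full_added="$(git diff 8.x-1.x | grep -c '^+[^+]')"

echo "interdiff adds $interdiff_added line(s), full patch adds $full_added line(s)"
```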

(As an aside, the trick to applying a patch that’s against 8.x-1.x to a feature branch that already has a commit for a patch is that there is a way to check out files from any git commit while still keeping git’s HEAD on the current branch. So the patch applies, because the files look like 8.x-1.x, but when you make a commit, you’re on the feature branch. Details are on this Stack Overflow question.)
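One way to get that effect with plain git is to pass a commit and a path to git checkout. The sketch below shows the idea in a throwaway repository; it is an assumption that this matches what Dorgflow does, and the directory, file name, branch name '2782605-demo' and 'Demo' identity are all invented for the example.

```shell
# Throwaway identity so the commits work without any global git config.
export GIT_AUTHOR_NAME=Demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=Demo GIT_COMMITTER_EMAIL=demo@example.com

repo="$(mktemp -d)"
cd "$repo"
git init -q
git checkout -q -b 8.x-1.x
echo "original" > token.module
git add token.module
git commit -q -m "Main branch commit"

# Feature branch that already has a commit for an earlier patch.
git checkout -q -b 2782605-demo
echo "patched" > token.module
git commit -q -am "Earlier patch"

# Make the files look like 8.x-1.x again, without moving HEAD.
git checkout 8.x-1.x -- .

current_branch="$(git rev-parse --abbrev-ref HEAD)"
file_contents="$(cat token.module)"
echo "$current_branch: $file_contents"
```

One caveat with this form of the command: by default it only overwrites or restores files that exist in the named commit, so a file that an earlier patch added, and which isn't in 8.x-1.x, is left in place.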

At this point, the feature branch is ready for work. You can make as many commits as you want. (You can rename the branch if you like, provided the ‘2782605-’ part stays at the beginning.) To make your own patch with your work, just run the Dorgflow script without any argument:

$ dorgflow

The script detects the current branch, and from that, the issue number, and then fetches the issue node from drupal.org to get the number of the next comment to use in the patch filename. All you now have to do is upload the patch, and post a comment explaining your changes.
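The branch-to-issue step can be sketched in a couple of lines of shell. This is only an illustration of the idea, not Dorgflow's actual code; the branch name is hard-coded here, where a real script would ask git for it.

```shell
# In a real clone this would come from: git rev-parse --abbrev-ref HEAD
branch='2782605-Move-list-of-available-tokens-from-Help-to-Reports'

# Everything before the first hyphen is the issue number.
issue_number="${branch%%-*}"

# Which in turn gives the issue node to fetch.
issue_url="https://www.drupal.org/node/${issue_number}"

echo "$issue_url"
```

This is also why a renamed branch has to keep the issue number prefix: the rest of the name is never needed again, but the number is.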

Alternatively, if you’re a maintainer for the project, and the latest patch is ready to be committed, you can do the following to put git into a state where the patch is applied to the main development branch:

$ dorgflow commit

At that point, you just need to obtain the git commit command from the issue node. (Remember the drupal standard git message format, and to check the attribution for the work on the issue is correct!)

What if you’ve previously reviewed a patch, and now there’s a new one? Dorgflow can download new patches with this command:

$ dorgflow update

This compares your feature branch to the issue node’s patches, and any patches you don’t yet have get new commits.

If you’ve made commits for your own work as well, then effectively there’s a fork in play, as your development in your commits and the other person’s patch are divergent lines of development. Appropriately, Dorgflow creates a separate branch. Your commits are moved onto this branch, while the feature branch is rewound to the last patch that was already there, and then has the new patches applied to it, so that it now reflects work on the issue. It’s then up to you to do a git merge of these two branches in order to combine the two lines of development back into one.
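Here is a sketch of what that final merge looks like, replayed by hand in a throwaway repository. The branch name '2782605-demo-my-work' for the separate branch is hypothetical (check what Dorgflow actually calls it in your clone), as are the file names, commit messages and the 'Demo' identity.

```shell
# Throwaway identity so the commits work without any global git config.
export GIT_AUTHOR_NAME=Demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=Demo GIT_COMMITTER_EMAIL=demo@example.com

repo="$(mktemp -d)"
cd "$repo"
git init -q
git checkout -q -b 8.x-1.x
echo "base" > token.module
git add token.module
git commit -q -m "Main branch commit"

# Feature branch holding the patches from the issue.
git checkout -q -b 2782605-demo
echo "patch 1" > patch-work.txt
git add patch-work.txt
git commit -q -m "Patch from the issue"

# Hypothetical separate branch holding your own commits.
git checkout -q -b 2782605-demo-my-work
echo "my change" > my-work.txt
git add my-work.txt
git commit -q -m "My own work"

# Meanwhile, the feature branch gains a newer patch.
git checkout -q 2782605-demo
echo "patch 2" >> patch-work.txt
git commit -q -am "Newer patch from the issue"

# Combine the two lines of development back into one.
git merge -q -m "Merge my work with the latest patch" 2782605-demo-my-work

merged_count="$(git ls-files | wc -l | tr -d ' ')"
last_patch_line="$(tail -n 1 patch-work.txt)"
echo "$merged_count files, latest patch line: $last_patch_line"
```

In this toy case the two branches touch different files, so the merge is clean. In real life you should expect to resolve conflicts wherever your work and the new patch overlap.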

Dorgflow is still being developed. There are a few ideas for further features in the issue queue on github (not to mention a couple of bugs for some of the various possible cases the update command can encounter). I’m also pondering whether it’s worth the effort to convert the script to use Symfony Console; feel free to chime in with any opinions on the issue for that.

There are tests too, as it’s pretty important that a script that does things to your git repository does what it’s supposed to (though the only command that does anything destructive is ‘dorgflow cleanup’, which of course asks for confirmation). Having now written this, I’m obviously embarking upon cleaning it up and to some extent rewriting it, though I do have the excuse that the early weeks of working on this were the days after the late nights awake with my newborn daughter, and so the early versions of the code were written in a haze of sleep deprivation. If you’d like to submit a pull request, please do check in with me first on an issue to ensure it’s not going to clash with something I’m partway through changing.

Finally, if you find this as useful as I do (this was definitely an itch I’ve been wanting to scratch for a long time, as well as being a prime case of condiment-passing), please tell other Drupal developers about it. Let’s all spend less time downloading, applying, and rolling patches, and more time writing Drupal code!

Changing the type of a node

There’s an old saying that no information architecture survives contact with the user. Or something like that. You’ll carefully design and build your content types and taxonomies, and then find that the users aren’t using what you’ve built in quite the way you intended.

And so there comes a point where you need to grit your teeth, change the structure of the site’s content, and convert existing content.

Back on Drupal 7, I wrote a plugin for Migrate which handled migrations within a single Drupal site, so for example from nodes to a custom entity type, or from one node type to another. (The patch works, though I never found the time to polish it sufficiently to be committed.)

On Drupal 8, without the time to learn the new version of Migrate, I recently had to cobble something together quickly.

Fortunately, this was just changing the type of some nodes, and where all the fields were identical on both source and destination node types. Anything more complex would definitely require Migrate.

First, I created the new node type, and cloned all its fields from the old type to the new type. Here I took the time to update some of the Field Tools module’s functionality to Drupal 8, as it pays off to have a single form to clone fields rather than have to add them to the new node type one by one.

Field Tools also copies display settings where form and view modes match (in other words, if the source bundle has a ‘teaser’ display mode configured, and the destination also has a ‘teaser’ display mode that’s enabled for custom settings, then all of the settings for the fields being cloned are copied over, with field groups too).

With all the new configuration in place, it was now time to get down to the content. This was a hack, plain and simple, but one that worked fine for the case in question. Here’s how it went…

We basically want to change the bundle of a bunch of nodes. (Remember, the ‘bundle’ is the generic name for a node type. Node types are bundles, as taxonomy vocabularies are bundles.) The data for a single node is spread over lots of tables, and most of these have the bundle in them.

On Drupal 8 these tables are:

- the entity base table

- the entity data table

- the entity revision data table

- each field data table

- each field data revision table

(It’s not entirely clear to me what the separation between base table and data table is for. It looks like it might be that base table is fields that don’t change for revisions, and data table is for fields that do. But then the language is on the base table, and that can be changed, and the created timestamp is on the data table, and while you can change that, I wouldn’t have thought that’s something that has past values kept. Answers on a postcard.)

So we’re basically going to hack the bundle column in a bunch of tables. We start by getting the names of these tables from the entity type storage:

$storage = \Drupal::service('entity_type.manager')->getStorage('node');
// Get the names of the base tables.
$base_table_names = [];
$base_table_names[] = $storage->getBaseTable();
$base_table_names[] = $storage->getDataTable();
// (Note that revision base tables don't have the bundle.)

For field tables, we need to ask the table mapping handler:

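$table_mapping = \Drupal::service('entity_type.manager')->getStorage('node')
  ->getTableMapping();
// Assumption: $source_bundle_fields holds the definitions of the configurable
// fields on the source node type ('page' here). Base fields are skipped, as
// they live in the base tables handled above.
$source_bundle_fields = array_filter(
  \Drupal::service('entity_field.manager')->getFieldDefinitions('node', 'page'),
  function ($field) {
    return !$field->getFieldStorageDefinition()->isBaseField();
  }
);
// Get the names of the field tables for fields on the service node type.
$field_table_names = [];
foreach ($source_bundle_fields as $field) {
  $field_table = $table_mapping->getFieldTableName($field->getName());
  $field_table_names[] = $field_table;
  $field_storage_definition = $field->getFieldStorageDefinition();
  $field_revision_table = $table_mapping
    ->getDedicatedRevisionTableName($field_storage_definition);
  // Field revision tables DO have the bundle!
  $field_table_names[] = $field_revision_table;
}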

(Note the inconsistency in which tables have a bundle field and which don’t! For that matter, surely it’s redundant in all field tables? Does it improve the indexing perhaps?)

Then, get the IDs of the nodes to update. Fortunately, in this case there were only a few, and it wasn’t necessary to write a batched hook_update_N().

// Get the node IDs to update.
$query = \Drupal::service('entity.query')->get('node');
// Your conditions here!
// In our case, page nodes with a certain field populated.
$query->condition('type', 'page');
$query->exists('field_in_question');
$nids = $query->execute();

And now, loop over the lists of table names and hack away!

// Base tables have 'nid' and 'type' columns.
foreach ($base_table_names as $table_name) {
  \Drupal\Core\Database\Database::getConnection('default')
    ->update($table_name)
    ->fields(['type' => 'service'])
    // $nids is the list of node IDs from the entity query.
    ->condition('nid', $nids, 'IN')
    ->execute();
}
// Field tables have 'entity_id' and 'bundle' columns.
foreach ($field_table_names as $table_name) {
  \Drupal\Core\Database\Database::getConnection('default')
    ->update($table_name)
    ->fields(['bundle' => 'service'])
    ->condition('entity_id', $nids, 'IN')
    ->execute();
}

Node-specific tables use ‘nid’ and ‘type’ for their names, because those are the base field names declared in the entity type class, whereas Field API tables use the generic ‘entity_id’ and ‘bundle’. The mapping between these two is declared in the entity type annotation’s entity_keys property.

This worked perfectly. The update system takes care of clearing caches, so entity caches will be fine. Other systems may need a nudge; for instance, Search API won’t notice the changed nodes and its indexes will literally need turning off and on again.

Though I do hope that the next time I have to do something like this, the amount of data justifies getting stuck into using Migrate!